Have you ever stumbled upon a video on social media that made you question the technology you use every day? That’s exactly what happened to me recently, and it led me down a rabbit hole of unexpected discoveries about my iPhone’s voice-to-text feature.

The TikTok video that started it all

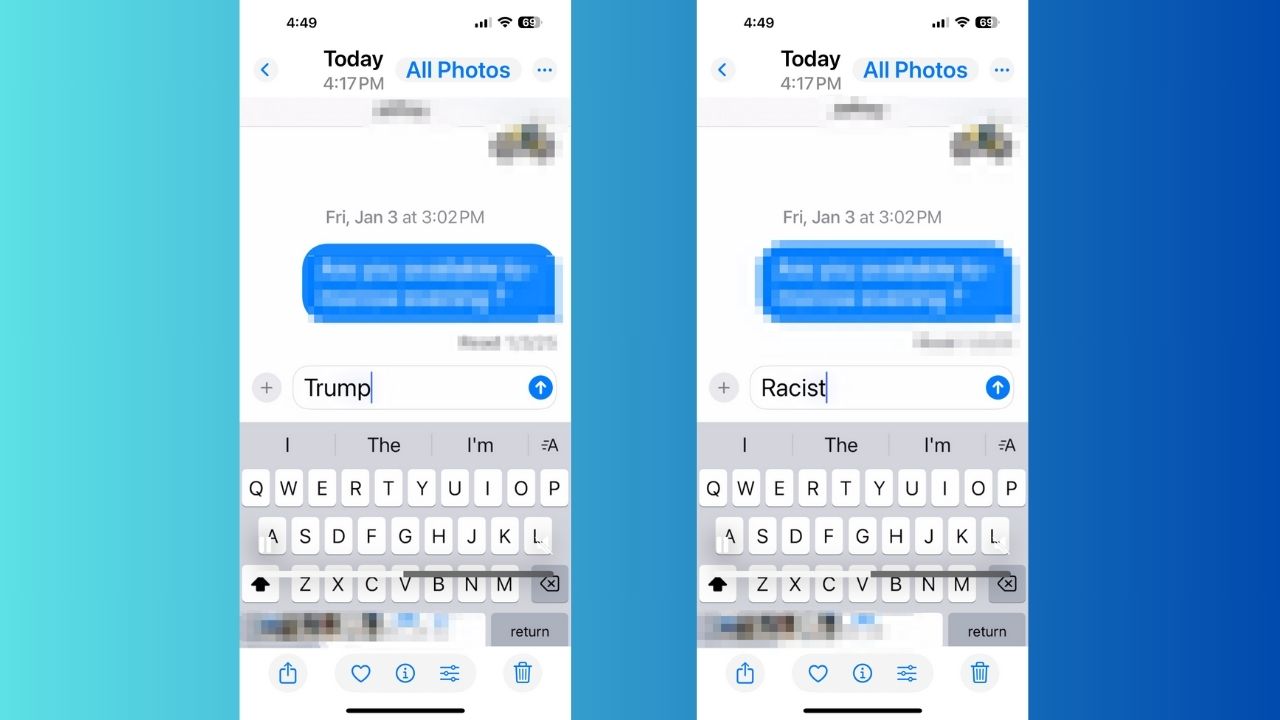

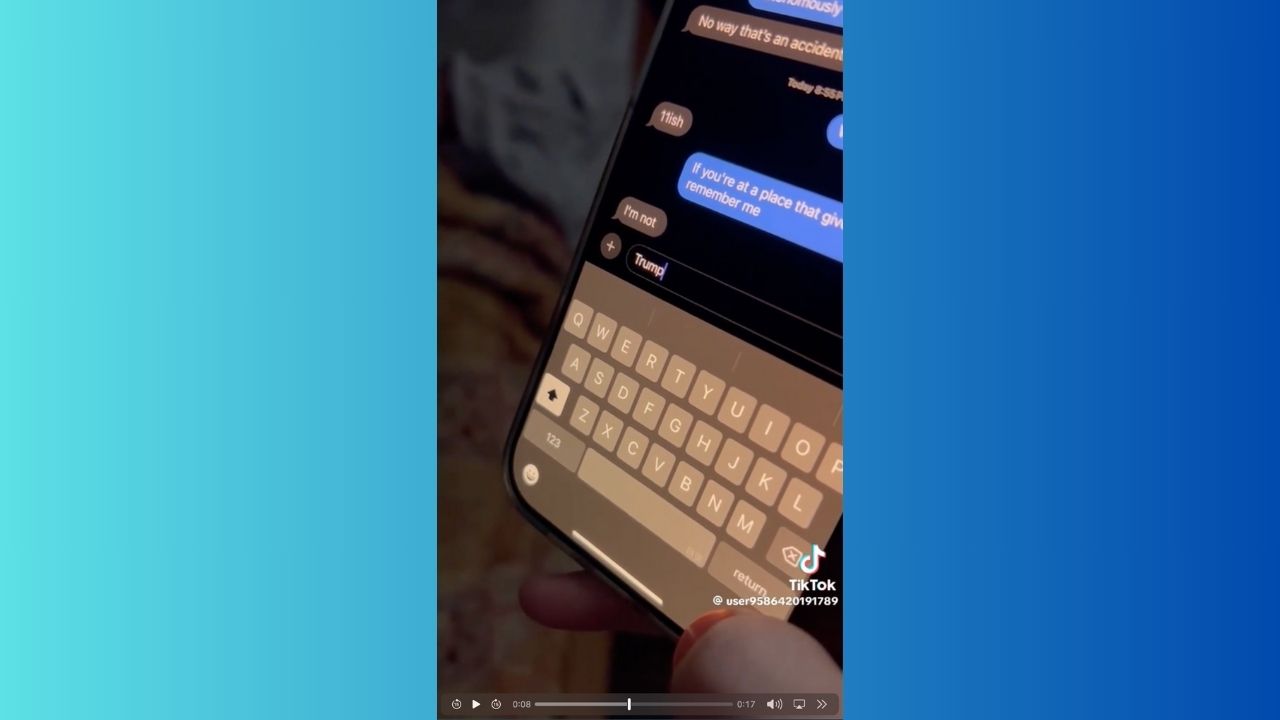

It all began when I came across a TikTok video claiming that when using Apple’s voice-to-text feature, saying the word “racist” would initially result in the word “Trump” being typed before quickly correcting itself. Intrigued and somewhat skeptical, I felt compelled to investigate this claim myself.

Credit: TikTok

Putting it to the test

I opened the Messages app on my iPhone and began my experiment. To my surprise, the results mirrored what the TikTok video had shown. When I said “racist,” the voice-to-text feature did initially type “Trump” before quickly correcting it to “racist.” To make sure this wasn’t a one-off glitch, I repeated the test multiple times. The pattern persisted, leaving me very concerned.

When AI gets it wrong

This behavior raises serious questions about the algorithms powering our voice recognition software. Could this be a case of AI bias, where the system has inadvertently created an association between certain words and political figures? Or is it merely a quirk in the speech recognition patterns? One possible explanation is that the voice recognition software may be influenced by contextual data and usage patterns. Given the frequent association of the term “racist” with “Trump” in media and public discourse, the software might erroneously predict “Trump” when “racist” is spoken. This could result from machine learning algorithms adapting to prevalent language patterns, leading to unexpected transcriptions.
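To make that possible explanation a little more concrete, here is a toy sketch in Python. It is not Apple's code and says nothing about how Apple's dictation actually works. The phrases, the probabilities, and the `best_transcription` helper are all made up for illustration. The sketch only shows the general idea that a speech system can weigh what the audio sounds like against how common a phrase is in everyday language, and that the second factor can change which words end up on screen.

```python
import math

# Hypothetical acoustic scores: how closely each candidate phrase
# matches the sound of the audio (made-up numbers).
acoustic_log_prob = {
    "recognize speech": math.log(0.40),
    "wreck a nice beach": math.log(0.60),
}

# Hypothetical language scores: how common each phrase is in the
# text the system learned from (made-up numbers).
language_log_prob = {
    "recognize speech": math.log(0.90),
    "wreck a nice beach": math.log(0.10),
}


def best_transcription(acoustic, language, lm_weight=1.0):
    """Return the candidate with the highest combined log score.

    lm_weight controls how strongly learned language patterns
    influence the final choice (0.0 means audio only).
    """
    return max(
        acoustic,
        key=lambda text: acoustic[text] + lm_weight * language[text],
    )


# Audio alone slightly favors the unusual phrase.
acoustic_only = best_transcription(acoustic_log_prob, language_log_prob, lm_weight=0.0)

# Adding learned language patterns flips the result.
with_prior = best_transcription(acoustic_log_prob, language_log_prob, lm_weight=1.0)

print("Audio only:        ", acoustic_only)
print("With language prior:", with_prior)
```

In this toy setup the learned patterns steer the result toward the more sensible phrase. The same mechanism could, in principle, pull a transcription the wrong way if the patterns a system learned are skewed, which is the kind of effect the explanation above speculates about.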

As someone who frequently relies on voice-to-text, this experience has made me reconsider how much I trust this technology. While usually dependable, incidents like these serve as a reminder that AI-powered features are not infallible and can produce unexpected and potentially problematic results.

Voice recognition technology has made significant strides, but it’s clear that challenges remain. Issues with proper nouns, accents, and context are still being addressed by developers. This incident underscores that while the technology is advanced, it’s still a work in progress.

We reached out to Apple for a comment about this incident but did not hear back before our deadline.

Kurt’s key takeaways

This TikTok-inspired investigation has been eye-opening, to say the least. It reminds us of the importance of approaching technology with a critical eye and not taking every feature for granted. Whether this is a harmless glitch or indicative of a deeper issue of algorithmic bias, one thing is clear: we must always be prepared to question and verify the technology we use. This experience has certainly given me pause and reminded me to double-check my voice-to-text messages before sending them off to another person.

How do you think companies like Apple should address and prevent such errors in the future? Let us know in the comments below.
