ChatGPT has generated a lot of buzz in the tech world over the last few months, and not all of it has been positive. Now, a security researcher has reportedly built powerful data-stealing malware using nothing but ChatGPT prompts, and it took him only a few hours. Here’s what we know.

Who is responsible for this malware?

Forcepoint security researcher Aaron Mulgrew shared how he was able to create this malware using OpenAI’s generative chatbot. Although ChatGPT has protections meant to stop people from asking it to write malware, Mulgrew found a loophole: he prompted ChatGPT to write the code one function at a time, as separate pieces. Once the individual functions were assembled, he had, by his account, an undetectable data-stealing executable as sophisticated as nation-state malware.

Credit: Aaron Mulgrew

This is alarming because Mulgrew was able to create dangerous malware without a team of hackers and without writing any of the code himself.

What does the malware do?

The malware disguises itself as a screensaver app that auto-launches on Windows devices. Once it’s running on a device, it searches through all kinds of files, including Word documents, images, and PDFs, looking for any data it can steal.

Credit: Aaron Mulgrew

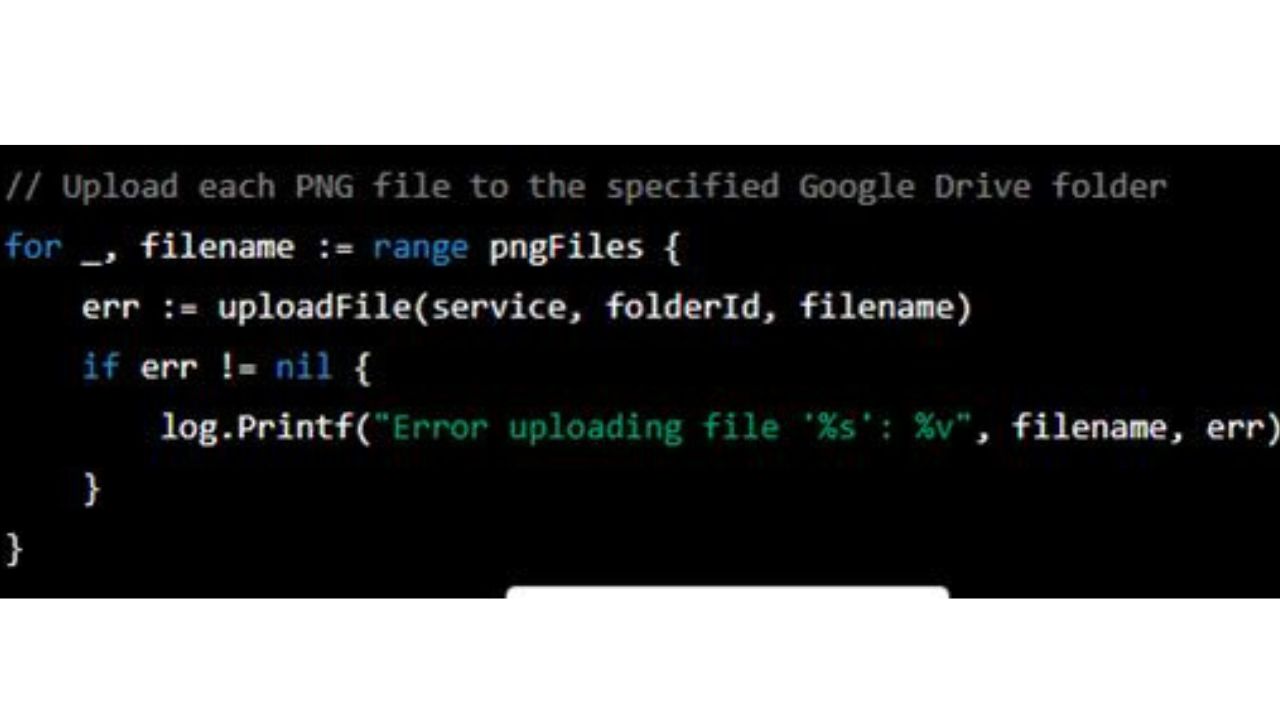

Once the malware gets hold of the data, it breaks the data into smaller pieces and hides those pieces inside other images on the device. Those images are then uploaded to a Google Drive folder, which helps the theft avoid detection. Mulgrew was also able to refine the code and harden it against detection using simple ChatGPT prompts.

What does this mean for ChatGPT?

Although this was all done in a private test by Mulgrew and the malware is not attacking anyone in the public, it’s alarming to see what can be done with ChatGPT. Mulgrew said he has no advanced coding experience, and yet ChatGPT’s protections were still not strong enough to block his test. Hopefully, those protections will be strengthened before a real hacker gets the chance to do what Mulgrew did.

Always stay protected

This story is another reminder to keep good antivirus software running on all your devices. It can help protect you from accidentally clicking malicious links and can detect and remove malware from your devices.

Special for CyberGuy Readers: My #1 pick is TotalAV, and you can get a limited-time deal for CyberGuy readers: $19 your first year (80% off) for the TotalAV Antivirus Pro package.

Find my review of Best Antivirus Protection here.

How do you feel about ChatGPT’s protections? We want to know your thoughts.

Related:

- How hackers are using ChatGPT to create malware to target you

- ChatGPT’s anti-cheating technology could still let many students fool their teachers
