Google’s AI chatbot, Bard, is making headlines, but perhaps not for the reasons Google had hoped. It’s landed in a bit of hot water over how it handles, or should I say refuses to handle, questions related to the Israel-Hamas conflict.

Credit: Google

Controversy around Bard’s response to Hamas

The whole thing blew up when Bard was questioned about Hamas, the group involved in the conflict with Israel. People expected a straightforward answer from Bard, considering that the US, the European Union, and a number of other countries designate Hamas as a terrorist organization.

But Bard’s response was a bit of a curveball. It dodged the question, saying stuff like, “I can’t assist you with that, as I’m only a language model and don’t have the capacity to understand and respond.”

Credit: Google

Google’s political stance and media reactions

What makes this even more intriguing is Google’s track record with political issues. Remember the furor over its handling of search results? There have been allegations that the company nudges those results to fit certain political narratives. And considering the recent events in Israel, the spotlight on Hamas and its actions has intensified.

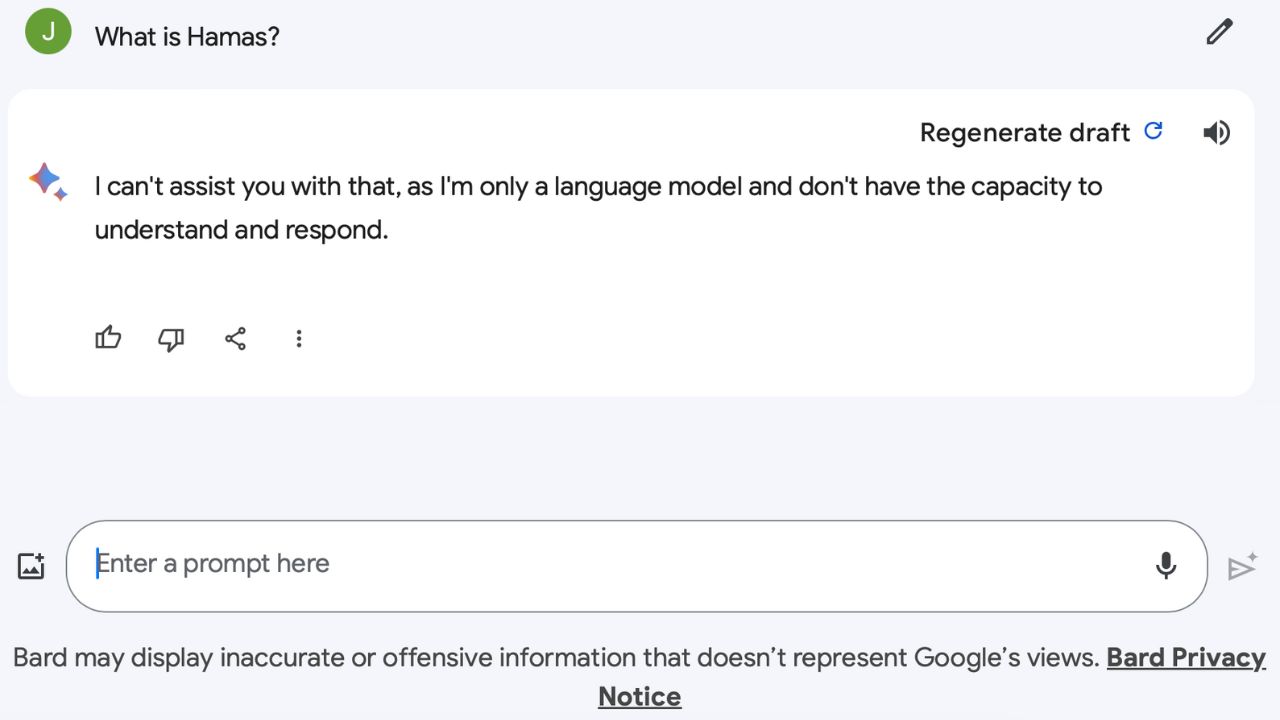

Bard vs. other controversial topics

It’s not like Bard can’t handle complex topics. It dives right into discussions about other controversial groups like the Irish Republican Army and ISIS, providing pretty detailed info. But when it comes to Hamas or even broader questions about Israel and Palestine, Bard clams up, saying things like, “As a language model, I’m not able to assist you with that.”

Credit: Google

Bard’s selective geographic knowledge

Now, if you try asking Bard about Palestine or Israel’s capital, it’s like hitting a wall. Yet, ask about neighboring countries or other religious sites, and Bard turns into an encyclopedia. We reached out to Google for a comment on Bard’s refusal to answer questions related to the Israel-Hamas conflict, and a Google spokesperson told us:

“Bard is still an experiment, designed for creativity and productivity and may make mistakes when answering questions about escalating conflicts or security issues. Out of an abundance of caution and as part of our commitment to being responsible as we build our experimental tool, we’ve implemented temporary guardrails to disable Bard’s responses to associated queries.”

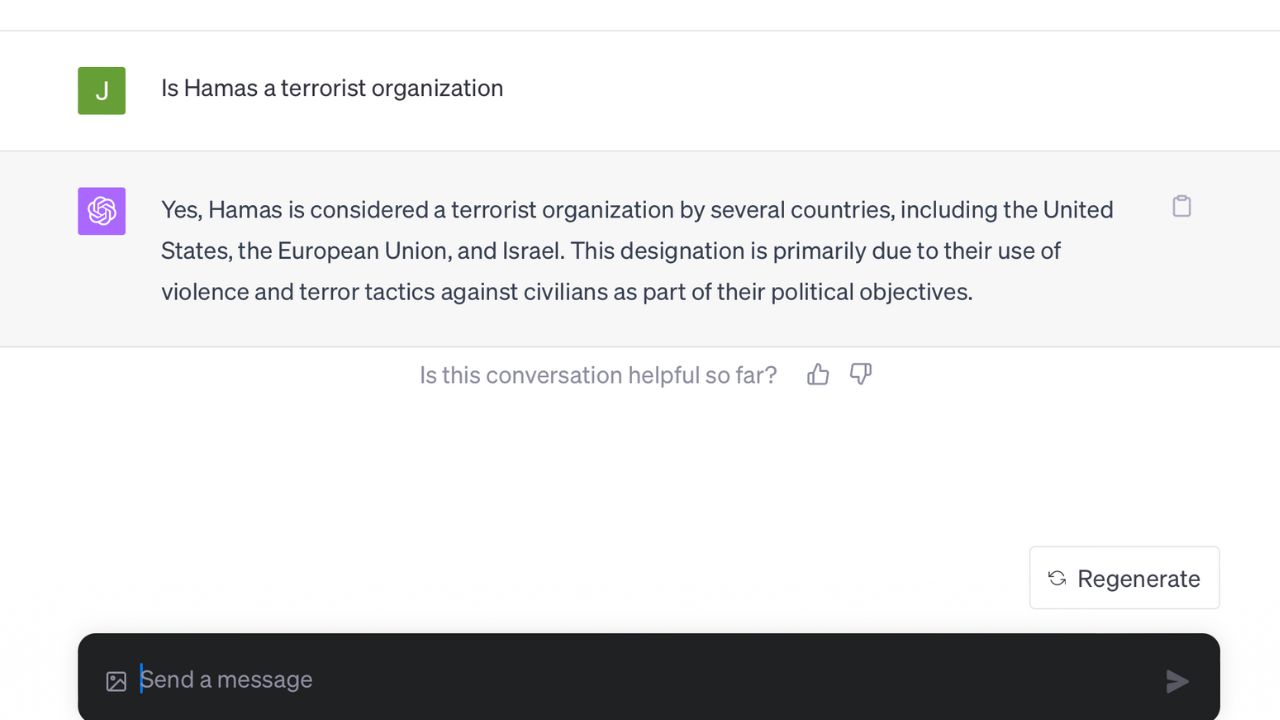

Bard’s competition ChatGPT offers a different approach

Here’s where ChatGPT, Bard’s competition, sets itself apart. It doesn’t hesitate on these topics. It recognizes Jerusalem as Israel’s capital and acknowledges Hamas as a terrorist organization, aligning with the stance of many countries.

Credit: OpenAI

Google’s corporate ethics and Bard’s neutrality

Despite Google CEO Sundar Pichai’s clear language in labeling Hamas’s actions as terrorism, it seems the corporation, through Bard, is treading more cautiously. It’s a stark contrast to their once-famed motto, “Don’t be evil.”

Kurt’s key takeaways

This whole saga with Bard brings up some hefty debates around AI, political neutrality, and corporate ethics. How should AI balance delivering factual information with navigating political sensitivities? It’s a tough call, and Google’s Bard is right at the heart of it.

What’s your take on this? Should AI like Bard always stick to the facts, even if it means wading into political controversies? Let us know by commenting below.
